The Impact of Generative AI on Productivity - Results of an Early Meta-Analysis

Generative AI, Productivity, Meta-Analysis

Acknowledgments: We would like to thank Tomas Havranek, Bob Reed and participants in Tomas Havranek’s meta-analysis course at the University of Canterbury, Christchurch for comments.

I. Introduction.

Over the last two years, there has been an explosion of academic literature on the economic impact of Generative AI (GenAI). In this paper, we take stock of part of this literature through a meta-analysis of the results of 34 papers that focus on the impact of GenAI on productivity.

We focus on productivity in a work environment by selecting papers that investigate how the introduction of GenAI affects the quantity and quality of work done, when GenAI is applied to real-life tasks rather than academic tasks or assignments, and typically (but not always) by employees rather than by students.

A meta-analysis of these studies allows us to identify the range of estimates available in the literature, to judge the external validity of individual studies, and to compute a meta-analytic average of these estimates, reflecting the ‘best’ estimate based on the literature as a whole. Having such numbers is important when estimating or developing scenarios for the macro-economic impact of GenAI. When analyzing the impact of GenAI on economic growth, Briggs and Kodnani (2023) use estimates from academic studies of the impact on productivity of non-generative AI. Similarly, Acemoglu (2024) multiplies the average of the estimates from only 2 studies (0.27, estimates from Noy and Zhang (2023) and Brynjolfsson, Li, and Raymond (2024)) with the share of jobs exposed to GenAI (0.57) to estimate that “the average (overall) cost savings from AI are about 0.27 × 0.57 = 0.154”.

Based on more than 1000 estimates from 34, rather than 2, studies, and after controlling for publication bias, our preferred specification suggests that GenAI on average increases productivity by about 17%. We further show substantial heterogeneity in reported estimates, suggesting that context matters a lot, with weak evidence that quantitative measures of productivity (like time spent producing an item or number of items produced in a given amount of time) experience a bigger impact, measured in percentage change, than qualitative measures of productivity (like quality of work produced). There is also some evidence that experimental studies show higher partial correlations between GenAI use and productivity than quasi-experimental studies.

Section II explains how we selected studies and section III discusses the characteristics of these studies. We then discuss different ways to aggregate the estimates (section IV), how we correct for publication bias (sections V and VI) and how study characteristics affect estimates (section VII). Sections VIII to XII repeat these steps for a different outcome measure, the partial correlation (Fisher Z) between GenAI use and productivity, rather than the estimated percentage change in productivity. The final section concludes.

II. Finding studies that estimate the impact of GenAI on Productivity.

To find relevant studies, we conducted a systematic search of literature on Gen AI and productivity using Scopus and Google Scholar.

We started from the primary keywords “Gen AI” and “productivity”, restricting the search to titles, abstracts, and keywords to ensure greater accuracy in both the initial and subsequent expanded searches.1

We selected Scopus as the initial database because its embedded keyword analysis tool automatically extracts keywords from retrieved studies, which makes it efficient to identify additional relevant keywords. We iteratively expanded the initial search query and continued searching until no new relevant keywords emerged. This led to the expanded search query “TITLE-ABS-KEY (“Gen* AI” OR “generative artificial intelligence” OR “large language model” OR “LLM” OR ChatGPT OR Copilot) AND TITLE-ABS-KEY (productivity OR performance OR quality OR quantity)”.

We restricted the search to English-language literature and limited the publication years to 2023 and later, considering the widespread adoption of ChatGPT since 2023. As a result, we retrieved 687 studies on Scopus.

We then conducted a Google Scholar search using the expanded query and retrieved the first 500 results sorted by relevance. We combined all retrieved studies and manually reviewed the titles and abstracts and skimmed the content of the retrieved studies. To be eligible, studies had to use empirical data or real-world experiments to demonstrate the actual impact of GenAI on productivity outcomes in workplace settings. We excluded studies that focused, for example, on GenAI’s effect on perceived productivity of workers or course outcomes of students. As a result, searching with keywords helped us find 30 relevant studies.

Finally, we checked the references of the selected studies to track additional eligible studies. This helped us identify 4 additional studies. In total, we retained 34 eligible studies.

III. Descriptive Statistics

As a starting point, we classified, based on the abstract, each study as finding either a positive effect, a mixed effect or finding no effect. Based on the abstract, 23 studies suggest GenAI improves productivity, 10 find mixed effects, with GenAI increasing productivity for some tasks or skill-levels but decreasing for others, while one study found no effect (Butler et al. (2024)). 12 studies include a measure of the percent change in productivity in their abstract, showing an average productivity improvement of 23.2%.

Twenty-one papers included results about who benefited most from GenAI tools. 15 papers concluded that GenAI tools benefit those with lower skills/experience the most, 4 found who benefited most depended on the specific outcome measure, 1 found GenAI benefited high performers most (Otis et al. (2024)) and 1 found it benefited all to a similar extent (Haslberger, Gingrich, and Bhatia (2024)).

It is further worth mentioning that 10 studies mentioned they used GenAI tools for their study. Besides copy-editing, GenAI was used to produce graphs (Dell’Acqua et al. (2024), Wiles et al. (2024)), to classify observations into categories (Wiles et al. (2024), Brynjolfsson, Li, and Raymond (2024), Yeverechyahu, Mayya, and Oestreicher-Singer (2024)) or to grade content or performance (Wiles et al. (2024), Freeman et al. (2024), Haslberger, Gingrich, and Bhatia (2024)). We have used ChatGPT, with mixed success, to extract estimates from tables and to search for and understand information about meta-analytic techniques and standard errors.

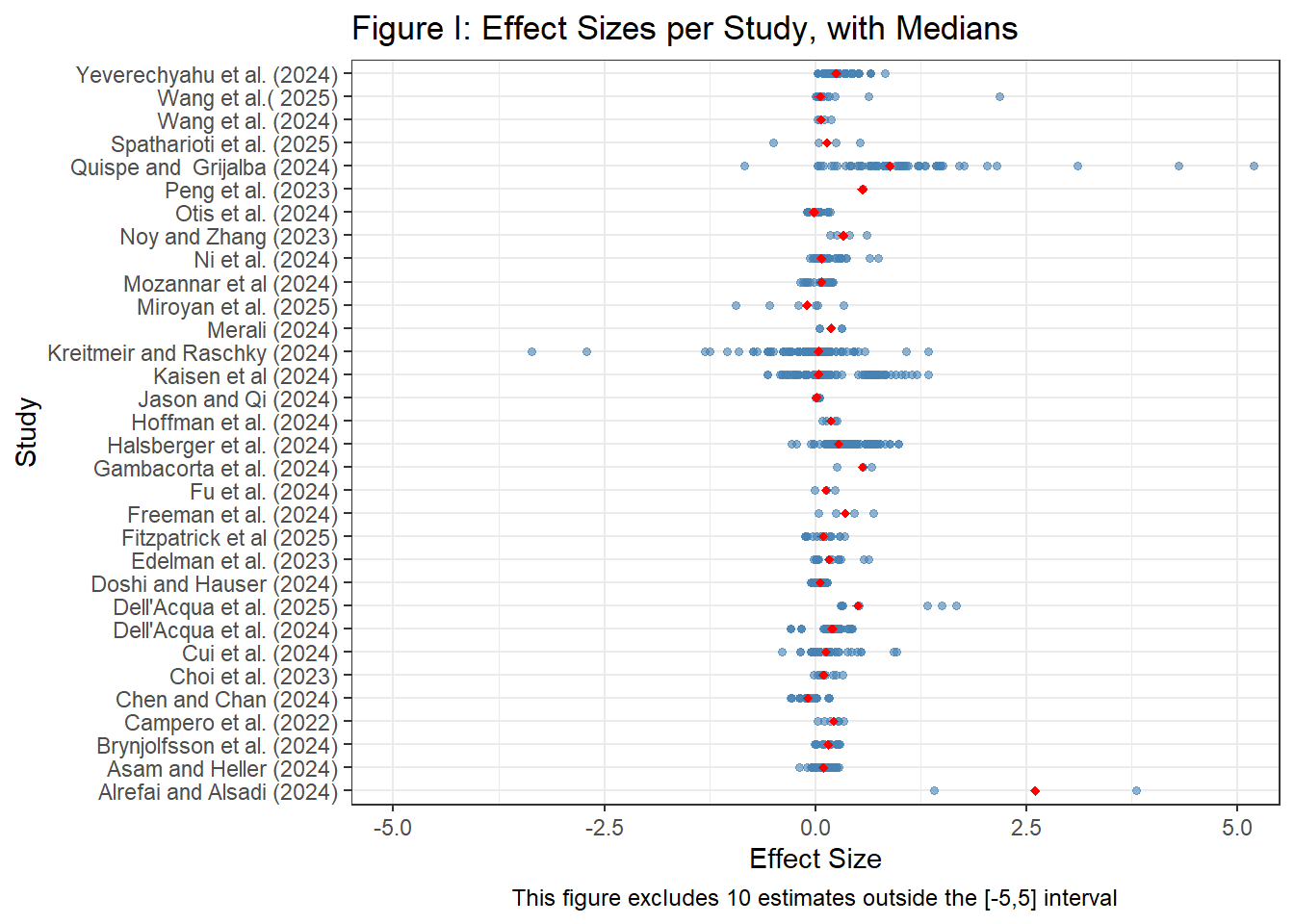

Figure I and Table I further highlight the diversity across the 32 studies for which we have estimates in terms of percentage changes.

For each of the identified studies, we coded, besides estimates and standard errors2, the following study-level characteristics.

Is the study experimental or not? 25 studies randomly provided access to a GenAI tool to some subjects (for example, Peng et al. (2023) or Choi, Monahan, and Schwarcz (2023)) but others (7 studies) used quasi-experiments like the introduction of ChatGPT in only some countries (Quispe and Grijalba (2024)), the ban on ChatGPT in Italy (Kreitmeir and Raschky (2024)), CoPilot only affecting software start-ups but not non-software related start-ups (Asam and Heller (2024)), CoPilot being introduced early to some programmers (Hoffmann et al. (2024)) or the availability of CoPilot for Python but not for R (Yeverechyahu, Mayya, and Oestreicher-Singer (2024)).

What is the main sector in which the work takes place? The most frequent task (10 studies) was coding (software industry), but other tasks included designing advertisements (Fu et al. (2024)), product innovation (Dell’Acqua et al. (2025)), blogging (Kaisen, Li, and Lu (2024)), office tasks (Freeman et al. (2024)), customer support (Ni et al. (2024)), writing short stories (Doshi and Hauser (2024)), tutoring (R. E. Wang et al. (2025)) and running a start-up (Otis et al. (2024)).

For what specific GenAI tool is the impact on productivity analysed? Most studies looked at the impact of ChatGPT (19, for example, Quispe and Grijalba (2024) or Freeman et al. (2024)) or CoPilot (for example, Hoffmann et al. (2024) or Butler et al. (2024)), while a few looked at company-specific GenAI tools (for example, Ni et al. (2024)).

Note there are many other dimensions in which these estimates differ. For example, some papers use control variables in a regression analysis, while others do not. Similarly, some estimates were obtained using clustered standard errors, while others were not. Given that we have a relatively small number of papers, our ability to figure out which characteristics cause estimates to differ is limited, and hence one should be cautious when interpreting differences in estimate sizes across estimate or paper characteristics.

Besides characteristics of the studies, we also looked at characteristics of the outcome variable used, and subdivided estimates into impacts on quantitative outcomes (28 studies) like speed or number of items produced (for example, Gambacorta et al. (2024) counts the number of lines of code produced over a period of 6 weeks, or Asam and Heller (2024) uses time-to-initial funding for start-ups) and impacts on qualitative measures (28 studies, for example, Doshi and Hauser (2024) measures the creativity of stories produced with or without the help of GenAI, or Fu et al. (2024) measures the ‘usefulness’ of designs).

The fact that different studies use different outcome measures makes it hard to compare estimates across studies. For example, how can one compare a study that finds GenAI increases output by 5 lines of code per hour to a study that finds GenAI increases the creativity score by 1 on a scale from 0 to 10?

To have a measure of the impact on productivity that is comparable across studies, we transformed, where possible, estimates to percentage changes, relative to the base category of productivity when no GenAI tool was available. Unfortunately, not all studies provide enough information to compute this measure.3

We therefore also use a more standard approach in the meta-analysis literature by focusing on the correlation between the outcome and the treatment, through partial correlation coefficients or Fisher Z scores, which are derived from estimated t-statistics. So we will present two sets of estimates, the first one being the more intuitive measure (percent changes, table I), the second (Fisher Z scores, table VI below) being available for more estimates.
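The percentage-change transformation described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name and the example numbers are hypothetical, and the standard error follows the simplification stated in the footnotes, which treats the base-category mean as a fixed number.

```python
def to_percent_change(effect, se_effect, base_mean):
    """Express a raw effect as a share of the no-GenAI base mean.

    Returns (pct_change, se_pct_change) as fractions, so 0.125 means 12.5%.
    """
    if base_mean == 0:
        raise ValueError("base_mean must be non-zero")
    pct_change = effect / base_mean
    # Multiplying pct_change by se_effect / effect is algebraically the same
    # as dividing se_effect by the base mean; the base mean is treated as
    # known, so this understates the true standard error.
    se_pct_change = abs(se_effect / base_mean)
    return pct_change, se_pct_change


# Hypothetical study: +5 lines of code per hour (se 2) on a base of 40.
pct, se_pct = to_percent_change(effect=5.0, se_effect=2.0, base_mean=40.0)
print(f"{pct:.3f} ({se_pct:.3f})")
```

With these made-up inputs the effect is a 12.5% improvement with a standard error of 5 percentage points.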

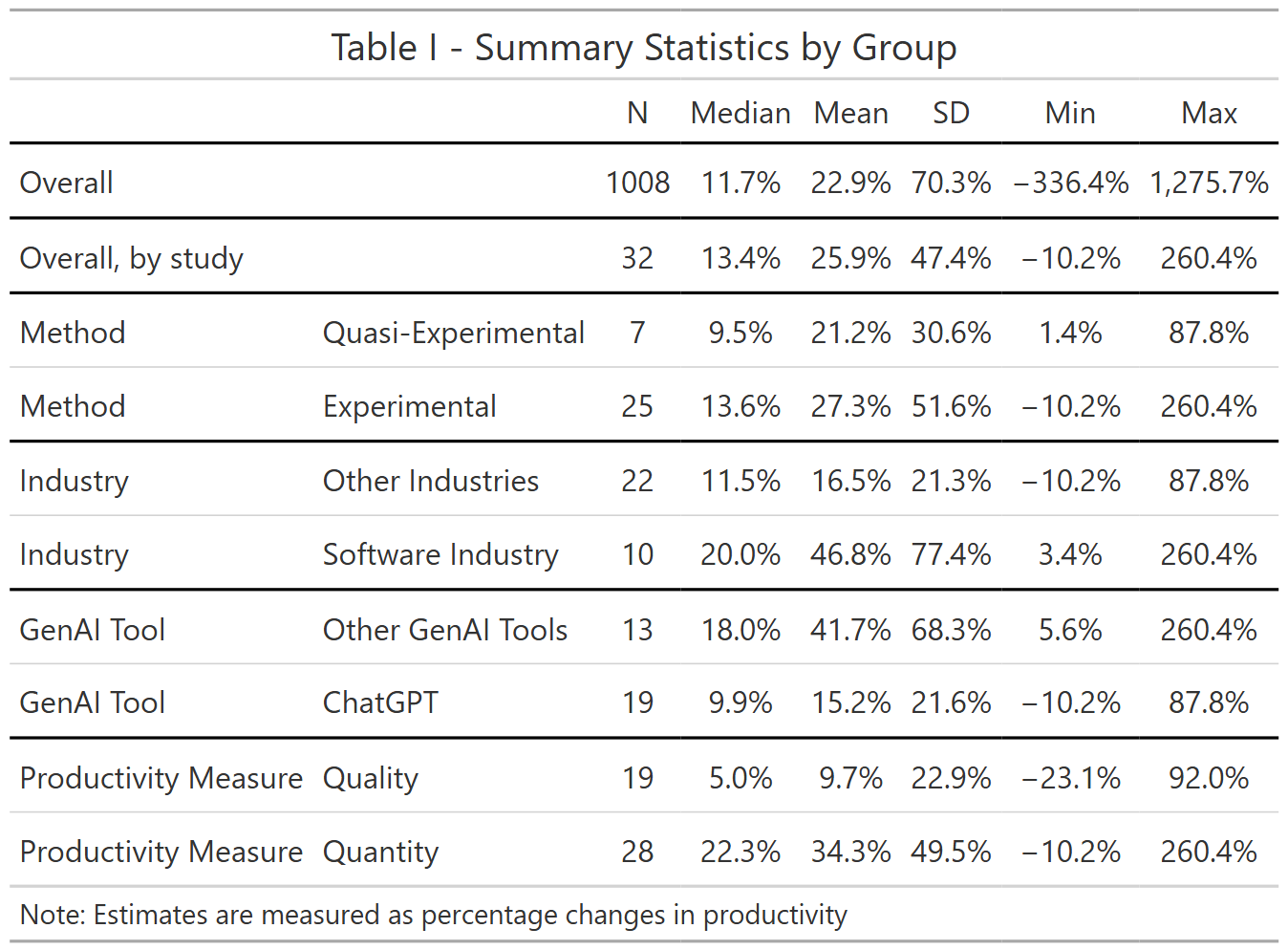

Table I shows we have a total of 1008 estimates from 32 studies, with a median estimate of 11.7% and a mean of 22.9%. Aggregating estimates first by study (so studies with more estimates do not get more weight) and then across studies (the ‘overall, by study’ statistics) shows similar results. It is also worth noting that the standard deviation and the range of estimates are very large, reflecting the heterogeneity in outcomes across studies.
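The difference between the ‘overall’ and the ‘overall, by study’ statistics can be illustrated with a short sketch. The data layout and function names are hypothetical, and the choice of the mean as the within-study summary is an assumption (it is passed in as a parameter, so the median can be used instead).

```python
from collections import defaultdict
from statistics import mean, median


def overall_stats(rows):
    """rows: iterable of (study_id, estimate). Every estimate counts equally."""
    values = [estimate for _, estimate in rows]
    return mean(values), median(values)


def by_study_stats(rows, within=mean):
    """Summarize each study first, so every study counts equally."""
    groups = defaultdict(list)
    for study_id, estimate in rows:
        groups[study_id].append(estimate)
    study_values = [within(values) for values in groups.values()]
    return mean(study_values), median(study_values)


# Hypothetical data: study A reports three estimates, study B only one.
rows = [("A", 0.10), ("A", 0.20), ("A", 0.30), ("B", 0.50)]
print(overall_stats(rows))
print(by_study_stats(rows))
```

For instance, with these made-up rows the pooled mean is 0.275, while the by-study mean is 0.35, because study B's single estimate now carries as much weight as study A's three estimates together.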

Table I further suggests that experimental studies find somewhat higher productivity improvements than quasi-experimental studies, that studies set in the software industry or not focusing on ChatGPT find higher productivity improvements and finally, that quantitative outcomes show bigger impacts than qualitative outcomes. Of course, as mentioned above, one should be careful interpreting these differences as causal given the correlation between these and other, omitted, characteristics.

IV. Weighing Estimates to Get an Overall Estimate.

So far we focused on the heterogeneity in the estimates. But how can we best aggregate these results to get one number that summarizes the findings of the literature? There are multiple ways one can aggregate estimates across studies. Table I provided statistics that weighed all estimates equally (the ‘overall’ statistics) or weighed estimates so all studies had the same weight, independent of how many estimates came from a given study (the ‘overall, by study’ statistics). However, one could argue that a more efficient estimate can be obtained if more precise estimates are given a higher weight. In the meta-analysis literature, several ways to weigh estimates have been proposed. In table II below, we provide, in addition to the OLS estimate (which corresponds to the mean estimate of Table I), a fixed effect estimate, a random effect estimate and two 3-level estimates.

The OLS model assumes that the estimate i (out of N) comes from a distribution with mean \(\beta_0\) and variance \(\sigma^2\).

\[ Estimate_i = \beta_0 + \epsilon_i, \quad \epsilon_i \sim N(0, \sigma^2), \quad i = 1, \dots, N \]

All estimates are thus assumed to come from the same distribution, and the only reason why estimates differ is because of sampling error.

The fixed effects model maintains the assumption of one single true effect but relaxes the assumption of homoscedasticity and gives higher weight to more precise estimates (that is, weighted least squares).

\[ Estimate_i = \beta_0 + \epsilon_i, \quad \epsilon_i \sim N(0, se(Estimate_i)^2), \quad i = 1, \dots, N \]
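As an illustration of the fixed effects weighting, the following sketch computes the inverse-variance weighted mean implied by the equation above. The function name and the example numbers are hypothetical; the code only shows the mechanics of the weighting, not the authors' estimation routine.

```python
import math


def fixed_effect_mean(estimates, std_errors):
    """Inverse-variance weighted mean and its standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical inputs: one noisy large estimate, one precise small estimate.
pooled, pooled_se = fixed_effect_mean([0.30, 0.02], [0.10, 0.01])
print(f"{pooled:.4f} ({pooled_se:.4f})")
```

In this made-up example the precise estimate receives 100 times the weight of the noisy one, so the pooled value (about 0.023) sits very close to 0.02 even though the simple average is 0.16.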

The random effects model allows for an additional normally distributed random effect \(\theta_i\) with mean zero and variance \(\tau^2\).

\[ Estimate_i = \beta_0 + \theta_i + \epsilon_i, \quad \theta_i \sim N(0, \tau^2), \quad \epsilon_i \sim N(0, se(Estimate_i)^2), \quad i = 1, \dots, N \]

In other words, there is a unique true effect for each estimate, which consists of the common effect \(\beta_0\) and a draw from a common normal distribution.
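A minimal sketch of a random effects average follows. It assumes the DerSimonian-Laird moment estimator for \(\tau^2\), which is one common choice; the text does not state which estimator was used, so this is an illustration of the idea rather than a replication. The function name and inputs are hypothetical.

```python
import math


def random_effects_mean(estimates, std_errors):
    """Random effects average using the DerSimonian-Laird tau^2 (assumed)."""
    k = len(estimates)
    if k < 2:
        raise ValueError("need at least two estimates")
    w = [1.0 / se ** 2 for se in std_errors]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sw
    # Cochran's Q: weighted squared deviations from the fixed effect mean.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c)
    # Adding tau^2 to every sampling variance flattens the weights.
    w_re = [1.0 / (se ** 2 + tau2) for se in std_errors]
    sw_re = sum(w_re)
    pooled = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sw_re
    return pooled, math.sqrt(1.0 / sw_re), tau2


pooled, pooled_se, tau2 = random_effects_mean([0.30, 0.02], [0.10, 0.01])
print(f"pooled={pooled:.3f} se={pooled_se:.3f} tau2={tau2:.4f}")
```

Because \(\tau^2\) is added to every estimate's variance, the weights become more equal as heterogeneity grows, which is why a random effects average tends to lie between the fixed effects estimate and the unweighted mean.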

The three-level, hierarchical effects meta-analysis further modifies this equation to recognize that estimates from the same study are likely to have a common effect. Writing \(Estimate_{ij}\) for estimate i from study j, with \(j = 1, \dots, G\):

\[ Estimate_{ij} = \beta_0 + \phi_j + \theta_{ij} + \epsilon_{ij}, \quad \theta_{ij} \sim N(0, \tau^2), \quad \phi_j \sim N(0, \upsilon^2), \quad \epsilon_{ij} \sim N(0, se(Estimate_{ij})^2) \]

So in addition to the common effect \(\beta_0\) and the random estimate-level effect \(\theta_{ij}\), there is also a study-level effect, \(\phi_j\), shared by all estimates from study j.

Finally, the 3L-VCV model, also known as the correlated and hierarchical effects meta-analysis, further allows sampling errors to be correlated at the study level. The latter correlation is not estimated, however; instead it needs to be imposed. We chose a value of 0.15 as this minimized the Akaike Information Criterion (AIC) and hence fitted the data well.
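To make the imposed correlation concrete, the sketch below builds a sampling variance-covariance matrix in which any two estimates from the same study share a correlation of 0.15 and estimates from different studies are uncorrelated. The constant within-study correlation and the function name are assumptions made for illustration.

```python
def sampling_vcv(std_errors, study_ids, rho=0.15):
    """Block-diagonal sampling covariance matrix as a list of lists."""
    n = len(std_errors)
    vcv = [[0.0] * n for _ in range(n)]
    for a in range(n):
        for b in range(n):
            if a == b:
                # Diagonal: the squared standard error of the estimate.
                vcv[a][b] = std_errors[a] ** 2
            elif study_ids[a] == study_ids[b]:
                # Same study: covariance implied by the imposed correlation.
                vcv[a][b] = rho * std_errors[a] * std_errors[b]
    return vcv


# Hypothetical example: two estimates from study A, one from study B.
for row in sampling_vcv([0.1, 0.2, 0.3], ["A", "A", "B"]):
    print(row)
```

Setting `rho=0` returns a diagonal matrix, which corresponds to the plain three-level model with independent sampling errors.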

Table II - Averaging Estimates using Various Weights

| | OLS | Fixed Effects | Random Effects | 3L Effects | 3L-VCV Effects |
|---|---|---|---|---|---|
| overall | 0.229 | 0.014 | 0.172 | 0.200 | 0.167 |
| | (0.096*) | (0.000***) | (0.045**) | (0.042***) | (0.031***) |
| Num.Obs. | 1008 | 1008 | 1008 | 1008 | 1008 |
| AIC | 2154.2 | 27085.7 | 595.7 | 208.1 | 137.0 |

Notes: The estimated 'overall' effect is the result of a regression of the estimate of the impact of GenAI on productivity, measured as percentage changes in productivity, on a constant. The different models make different assumptions about the error terms as described in the text.

Table II shows that if we assume all estimates share one true effect (the fixed effects model), the best estimate is an extremely small impact. However, this assumption implies giving a lot of weight to the most precise estimates, and hence a handful of studies can have a huge impact on the overall estimate. Given the diversity of the papers in the sample, the fixed effect assumption is unlikely to hold and the random effects estimator makes more sense.4 The random effects estimate is closer to the OLS estimate and suggests a 17.2% improvement in productivity associated with GenAI. The random effects estimator assumes all estimates are independent, but since many estimates come from the same study, the 3-level estimators, which allow for correlations between groups of estimates, are more realistic. Based on the minimum AIC criterion, our preferred estimate of the overall average comes from the 3-level VCV model, which shows that using GenAI increases productivity by 16.7%.5

V. Correcting for Publication Bias

So far, we assumed that there was no publication bias: that is, that researchers are as likely to publish insignificant results as significant ones. In reality, however, researchers might be less likely to report insignificant results, as they know there is a disproportionate interest in significant results.

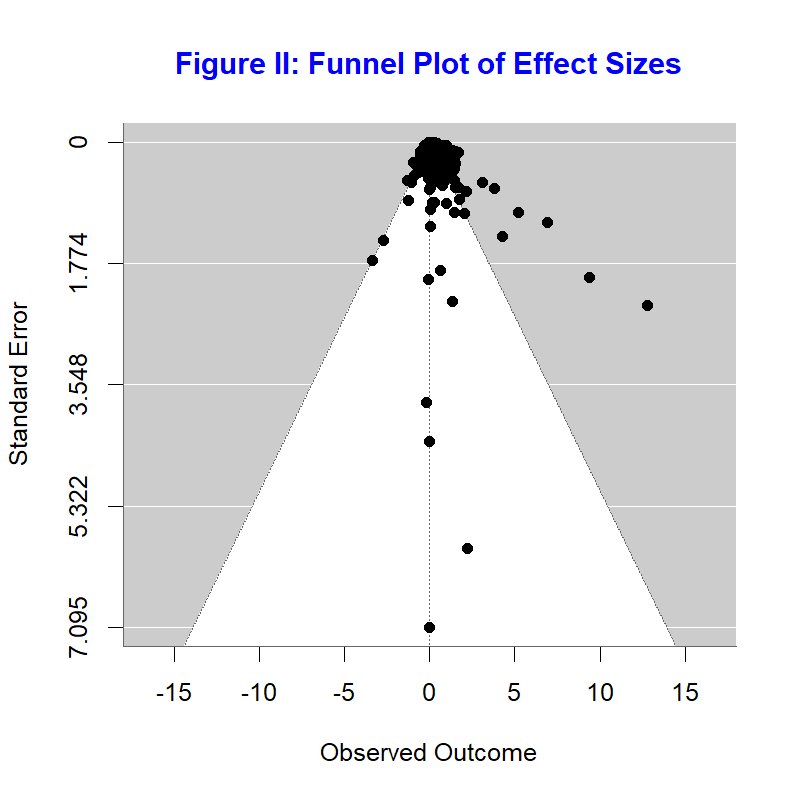

The standard way to check for publication bias is the funnel plot. In the absence of publication bias, there should not be a relationship between the estimate and its standard error. The funnel plot in figure II plots the precision of the estimate against the estimated effect size.

If there is no publication bias, one should see most estimates inside the funnel. In our case, we see more estimates on the right side of the funnel than on the left side, suggesting there could be publication bias.

Table III - Publication Bias - FAT-PET

| | OLS | Fixed Effects | Random Effects | 3L Effects | 3L-VCV Effects |
|---|---|---|---|---|---|
| intercept | 0.158 | 0.013 | 0.107 | 0.143 | 0.105 |
| | (0.039***) | (0.000***) | (0.046*) | (0.047**) | (0.030**) |
| St. Error | 0.469 | 2.614 | 0.871 | 0.655 | 1.014 |
| | (0.742) | (0.698**) | (0.827) | (0.607) | (0.398*) |
| Num.Obs. | 1008 | 1008 | 1008 | 1008 | 1008 |
| AIC | 2081.1 | 20305.5 | 517.9 | 169.8 | 98.3 |

Notes: The estimated 'overall' effect is the result of a regression of the estimate of the impact of GenAI on productivity, measured as percentage changes in productivity, on a constant and the standard error of the estimate, to control for publication bias. The different models make different assumptions about the error terms as described in the text.

To incorporate publication bias in the weighting models described above, we include the standard error of the estimates in the regression models (the so-called FAT-PET test). This variable allows us to control for the possible link between the standard error and the estimated effect size. In this regression, the intercept becomes the estimated effect size in the absence of publication bias.

Table III shows mixed results in terms of publication bias: while all coefficients are positive, suggesting that more precise estimates (which have smaller standard errors) tend to show smaller effect sizes, the coefficients are not always statistically significant. Once we control for the potential bias, the estimate of the overall effect becomes somewhat smaller, falling from 16.7% for the 3-level VCV estimate which assumes no publication bias to 10.5% once publication bias is taken into account.

VI. PEESE Analysis

The traditional next step after finding that the overall effect is different from zero, is to do a regression including the square of the standard error as a control rather than the standard error itself, the idea being that theoretically publication bias should be non-linearly related to the estimated effect size. This is the so-called PEESE test.
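Written in the notation of section IV, and under the simplest error structure, the two publication-bias specifications differ only in the power of the standard error. The symbol \(\beta_1\) is a label introduced here for the publication-bias coefficient and does not appear elsewhere in the text.

\[ \text{FAT-PET:} \quad Estimate_i = \beta_0 + \beta_1 \, se(Estimate_i) + \epsilon_i \]

\[ \text{PEESE:} \quad Estimate_i = \beta_0 + \beta_1 \, se(Estimate_i)^2 + \epsilon_i \]

In both cases \(\beta_0\) is read as the effect size of a hypothetical estimate with a standard error of zero.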

Table IV - Publication Bias - PEESE

| | OLS | Fixed Effects | Random Effects | 3L Effects | 3L-VCV Effects |
|---|---|---|---|---|---|
| intercept | 0.221 | 0.014 | 0.168 | 0.195 | 0.167 |
| | (0.090*) | (0.000***) | (0.041**) | (0.038***) | (0.031***) |
| St. Error^2 | 0.039 | 1.167 | 0.332 | 0.245 | 0.271 |
| | (0.070) | (1.229) | (0.544) | (0.407) | (0.253) |
| Num.Obs. | 1008 | 1008 | 1008 | 1008 | 1008 |
| AIC | 2142.5 | 26835.3 | 578.7 | 199.8 | 128.8 |

Notes: The estimated 'overall' effect is the result of a regression of the estimate of the impact of GenAI on productivity, measured as percentage changes in productivity, on a constant and the square of the standard error of the estimate, to control for publication bias. The different models make different assumptions about the error terms as described in the text.

Interestingly, the PEESE results in table IV suggest there is no significant non-linear publication bias, and hence bring our preferred 3-level VCV estimates back up to a 16.7% increase in productivity.

VII. Heterogeneity Analysis

To further try to explain why estimates differ, we next include 4 dummies. Three reflect differences between studies: whether the study was experimental or not, whether the study focused on the software industry or not, and whether ChatGPT was the GenAI tool or not. The fourth is a regression-level variable: whether the outcome was a measure of quantity or not.

Table V - Heterogeneity Analysis

| | OLS | Fixed Effects | Random Effects | 3L Effects | 3L-VCV Effects |
|---|---|---|---|---|---|
| intercept | 0.272 | 0.027 | 0.126 | 0.157 | 0.103 |
| | (0.308) | (0.054) | (0.140) | (0.166) | (0.131) |
| St. Error^2 | 0.041 | 0.948 | 0.334 | 0.243 | 0.247 |
| | (0.070) | (1.108) | (0.505) | (0.400) | (0.247) |
| Quantity | 0.241 | 0.021 | 0.142 | 0.142 | 0.125 |
| | (0.192) | (0.040) | (0.102) | (0.140) | (0.139) |
| Software | -0.370 | 0.093 | -0.140 | -0.015 | -0.002 |
| | (0.298) | (0.045) | (0.121) | (0.107) | (0.087) |
| Experiment | -0.155 | 0.011 | -0.018 | 0.021 | 0.054 |
| | (0.237) | (0.033) | (0.087) | (0.124) | (0.074) |
| ChatGPT | -0.011 | -0.025 | 0.004 | -0.102 | -0.084 |
| | (0.208) | (0.039) | (0.107) | (0.093) | (0.079) |
| Num.Obs. | 1008 | 1008 | 1008 | 1008 | 1008 |
| AIC | 2085.3 | 20784.7 | 497.2 | 142.0 | 84.1 |

Notes: The estimated 'overall' effect is the result of a regression of the estimate of the impact of GenAI on productivity, measured as percentage changes in productivity, on a constant, the square of the standard error of the estimate (to capture publication bias) and several study characteristics. The different models make different assumptions about the error terms as described in the text.

Table V shows it is hard to conclude much about what drives heterogeneity, as all estimated coefficients are insignificant. That being said, in terms of the size of the estimated effects, the impact on quantity seems substantially bigger (about 12.5 percentage points) than the impact on quality. If we set the other characteristics at their mean value and the standard error squared at 0 (a so-called best practice regression), the average for qualitative studies is relatively small (6.9%) and insignificant (se = 9.6%), while the average for quantitative studies is sizeable at 19.4% and significant (se = 5.7%).

Similarly, the impact of ChatGPT seems smaller (by about 8.4 percentage points) than the impact of other GenAI tools. If we set the other characteristics at their mean value and the standard error squared at 0, the average for ChatGPT is small (12.5%, se = 3.7%), while the average for other GenAI tools is more sizeable at 20.9% (se = 6.8%).

Finally, the estimated size of the impact of industry or method is relatively small.

Of course, one should be careful in interpreting these differences, not only because we find they are statistically insignificant, but also because omitted variables could be correlated both with the effect size and the included characteristics.
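The ‘best practice’ averages reported above are linear predictions in which one dummy is switched on or off while everything else is held fixed. The short sketch below reproduces the arithmetic using only the rounded 3L-VCV figures quoted in the text; the function name is hypothetical.

```python
def predicted_effect(baseline, coefficient, dummy):
    """Linear prediction: baseline average plus coefficient when dummy = 1."""
    return baseline + coefficient * dummy


# Qualitative outcomes average 6.9%; the Quantity coefficient is 0.125.
quantitative = predicted_effect(baseline=0.069, coefficient=0.125, dummy=1)

# Other GenAI tools average 20.9%; the ChatGPT coefficient is -0.084.
chatgpt = predicted_effect(baseline=0.209, coefficient=-0.084, dummy=1)

print(round(quantitative, 3), round(chatgpt, 3))
```

The two results, 0.194 and 0.125, match the 19.4% and 12.5% averages quoted for quantitative outcomes and for ChatGPT.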

VIII. Using Fisher Z Measures Rather Than % Changes

In the above analysis, we used estimated percentage changes as the variable of interest. Not all studies provide estimates that can be transformed into percentage changes in productivity, for example because the outcome measures used are standardized values. Similarly, not all studies provide the mean level of the outcome without AI for all of the regressions used in the study.

As an alternative, we therefore repeat the above regression using Fisher Z measures. Fisher Z is a transformation of the partial correlation coefficient r, the latter being a function of the t statistic and the degrees of freedom.

\[ r_i = \frac{t_i}{\sqrt{t_i^2 + df_i}} \]

\[ Z_i = \frac{1}{2} \ln \left( \frac{1+r_i}{1-r_i} \right) \]
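The two formulas translate directly into code. The following is a minimal sketch with hypothetical function names and a made-up t statistic, intended only to show the transformation.

```python
import math


def partial_correlation(t_stat, df):
    """Partial correlation implied by a t statistic and degrees of freedom."""
    return t_stat / math.sqrt(t_stat ** 2 + df)


def fisher_z(r):
    """Fisher Z transformation of a correlation with -1 < r < 1."""
    return 0.5 * math.log((1.0 + r) / (1.0 - r))


# Hypothetical regression result: t = 2.0 with 396 degrees of freedom.
r = partial_correlation(2.0, 396)
z = fisher_z(r)
print(f"r={r:.4f} Z={z:.4f}")
```

For this example r equals 0.1 and Z is about 0.1003; for small correlations the two are nearly identical, and they diverge only as r approaches 1 in absolute value.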

The Fisher Z statistics can be categorized as “small”, “medium” and “large” effects based on cut-off values from Xue, Reed, and Aert (2024).

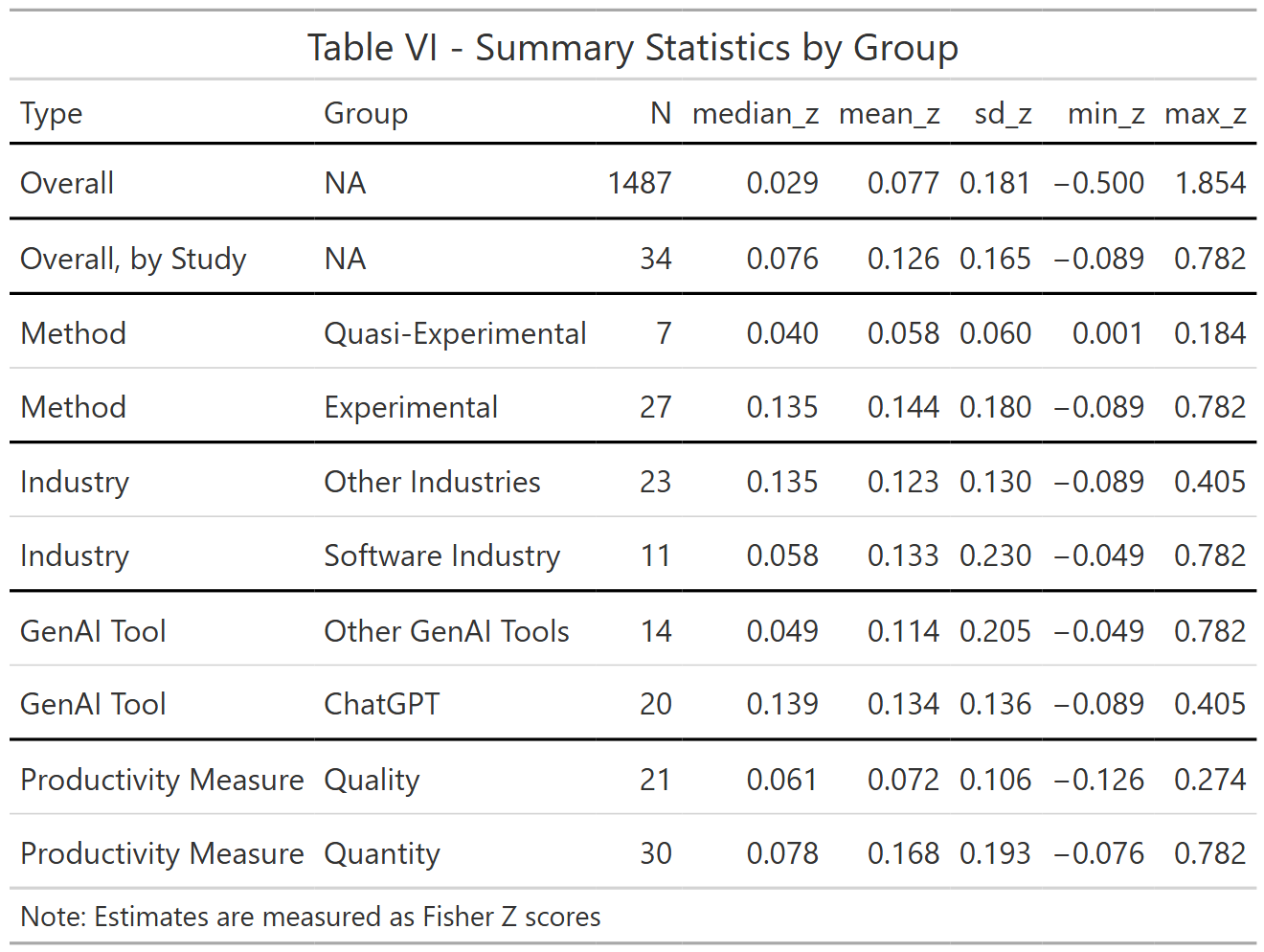

Table VI shows descriptive statistics, like table I: we now have about 50% more estimates, about 1500 estimates compared to about 1000 in table I. The median Fisher Z value is about 0.029 and the mean about 0.077. Different studies have different numbers of estimates, so in the overall average the studies with more estimates get a higher weight. If we give each study the same weight, by taking the median estimate within each study and then computing descriptive statistics, we get a median Fisher Z score of 0.076. Xue, Reed, and Aert (2024) suggest thresholds of 0.1, 0.23 and 0.41 for small, medium and large Fisher Z values, suggesting the partial correlations we find between productivity and GenAI use are relatively small.

Like table I, table VI again suggests that experimental studies and quantitative outcomes show a stronger association between productivity and GenAI use. However, in contrast to table I, studies evaluating ChatGPT, rather than other GenAI tools, seem to show stronger associations with productivity.

IX. Weighting Estimates - Fisher Z

Table VII - Averaging Estimates using Various Weights

| | OLS | Fixed Effects | Random Effects | 3L Effects | 3L-VCV Effects |
|---|---|---|---|---|---|
| overall | 0.077 | 0.011 | 0.063 | 0.122 | 0.116 |
| | (0.027**) | (0.003*) | (0.023*) | (0.028***) | (0.025***) |
| Num.Obs. | 1487 | 1487 | 1487 | 1487 | 1487 |
| AIC | -859.3 | 60352.7 | -1424.4 | -2334.5 | -2298.5 |

Notes: The estimated 'overall' effect is the result of a regression of a Fisher Z score on a constant. The different models make different assumptions about the error terms as described in the text.

Table VII reweighs the Fisher Z estimates, giving higher weights to more precise estimates. The model with the lowest AIC is now the 3-level model (without VCV, so setting rho to zero), which suggests an overall average Fisher Z score of about 0.122, a medium effect. Of course, this estimate does not account for publication bias.

X. Correcting for Publication Bias - Fisher Z

Table VIII shows some signs of publication bias, though the publication bias is insignificant for the models with the lowest AIC score.

Table VIII - Publication Bias - PET

| | OLS | Fixed Effects | Random Effects | 3L Effects | 3L-VCV Effects |
|---|---|---|---|---|---|
| intercept | 0.018 | 0.006 | 0.016 | 0.098 | 0.099 |
| | (0.019) | (0.003) | (0.016) | (0.031**) | (0.030**) |
| Standard Error | 1.815 | 2.405 | 1.895 | 0.387 | 0.298 |
| | (0.288***) | (0.831*) | (0.533**) | (0.648) | (0.580) |
| Num.Obs. | 1487 | 1487 | 1487 | 1487 | 1487 |
| AIC | -1269.3 | 54006.7 | -1665.1 | -2334.3 | -2297.5 |

Notes: The estimated 'overall' effect is the result of a regression of the Fisher Z score on a constant and the standard error of the Fisher Z score as a control variable, to capture publication bias. The different models make different assumptions about the error terms as described in the text.

Controlling for publication bias reduces the association between GenAI and productivity somewhat, to about 0.098.

XI. PEESE Analysis - Fisher Z

Having confirmed an effect is likely to exist, we next run the PEESE procedure which controls for the squared standard error (instead of just the standard error).

Table IX - Publication Bias - PEESE

| | OLS | Fixed Effects | Random Effects | 3L Effects | 3L-VCV Effects |
|---|---|---|---|---|---|
| intercept | 0.063 | 0.010 | 0.054 | 0.112 | 0.116 |
| | (0.024**) | (0.003*) | (0.022*) | (0.024***) | (0.024***) |
| St. Error^2 | 4.061 | 19.169 | 6.586 | 1.293 | -0.034 |
| | (0.815***) | (12.454) | (2.578) | (0.795) | (0.923) |
| Num.Obs. | 1487 | 1487 | 1487 | 1487 | 1487 |
| AIC | -1115.5 | 58476.1 | -1552.2 | -2334.5 | -2296.5 |

Notes: The estimated 'overall' effect is the result of a regression of the Fisher Z score on a constant and the square of the standard error of the Fisher Z score, to control for publication bias. The different models make different assumptions about the error terms as described in the text.

Interestingly, we again find less evidence of publication bias once we control for the square of the standard error, and the best estimates of the overall effect again increase to a moderate effect (about 0.11).

XII. Heterogeneity Analysis - Fisher Z

Interestingly, a heterogeneity analysis suggests little difference in the partial correlation between GenAI tool use and outcomes measured in quality or quantity, between different industries, or between GenAI tools.

Table X - Heterogeneity Analysis

| | OLS | Fixed Effects | Random Effects | 3L Effects | 3L-VCV Effects |
|---|---|---|---|---|---|
| intercept | 0.040 | 0.033 | 0.047 | 0.035 | 0.030 |
| | (0.053) | (0.019) | (0.052) | (0.032) | (0.032) |
| St. Error^2 | 3.946 | 18.859 | 6.317 | 1.009 | -0.280 |
| | (0.760***) | (12.427) | (2.032) | (0.833) | (1.070) |
| Quantity | -0.020 | 0.007 | -0.026 | -0.006 | -0.005 |
| | (0.048) | (0.010) | (0.044) | (0.014) | (0.015) |
| Software | 0.001 | -0.011 | -0.016 | 0.031 | 0.030 |
| | (0.041) | (0.017) | (0.030) | (0.060) | (0.058) |
| Experiment | 0.049 | -0.016 | 0.030 | 0.081 | 0.086 |
| | (0.044) | (0.008) | (0.042) | (0.043+) | (0.042+) |
| ChatGPT | 0.005 | -0.018 | 0.012 | 0.018 | 0.025 |
| | (0.041) | (0.020) | (0.036) | (0.044) | (0.044) |
| Num.Obs. | 1487 | 1487 | 1487 | 1487 | 1487 |
| AIC | -1148.4 | 52758.2 | -1607.1 | -2328.5 | -2290.9 |

Notes: The estimated 'overall' effect is the result of a regression of the Fisher Z score on a constant, the square of the standard error of the Fisher Z score (to capture publication bias) and several study characteristics. The different models make different assumptions about the error terms as described in the text.

But there is some evidence that experimental studies have Fisher Z scores that are about 0.086 higher than those of quasi-experimental studies.

Conclusions

In the short period, about two years, since Generative AI tools like CoPilot and ChatGPT were launched, a flurry of papers analyzing the impact of these tools on productivity in the workplace has been written. By now, these studies span a large number of settings, from writing software, doing data analysis, developing marketing ads and doing legal analysis to writing blogs or stories. In this paper, we summarize this literature and perform a meta-analysis on more than 1000 estimates from 34 studies.

We find that the vast majority of studies find a positive impact of the use of GenAI tools on productivity, though about a third warn that GenAI tools can have negative effects on the productivity of some people or on some outcomes.

Our analysis finds little evidence that publication bias is a major factor in this literature, but it does find substantial heterogeneity in the estimated effect sizes. Our preferred specifications (3-level models) suggest that the overall average effect, across studies, of GenAI use on productivity is about 17%. We further find that the association between GenAI use and productivity as measured by a Fisher Z score (which is based on the partial correlation) is about 0.11, which can be categorized as a medium effect.

Differences in study or estimate characteristics have limited power in explaining differences across estimated effect sizes. Estimates that measure the quantitative impact of GenAI tools are, on average, 12.5 percentage points higher than estimates that measure the qualitative impact, though this difference is not statistically significant. Similarly, the Fisher Z score does not show much difference between qualitative and quantitative outcomes. We do find, however, that Fisher Z scores (but not percentage changes) are significantly higher for experimental studies than for quasi-experimental studies.

While about a third of the studies we analyzed explicitly indicated they used GenAI tools, none of these studies provided an estimate of how much time they saved or how much the quality of their paper increased as a result of using these tools. This study suggests 17% is a reasonable estimate, and our experience writing this paper confirms this. That being said, this review is a review of the early studies of the impact of GenAI on productivity. As GenAI tools might continue to get better, updating this meta-analysis in the future might well be possible with much less help from the current authors.

List of Studies Used in the Meta-Analysis.

Alrefai and Alsadi (2024), Asam and Heller (2024), Brynjolfsson, Li, and Raymond (2024), Butler et al. (2024), Campero et al. (2022), Chen and Chan (2024), Choi, Monahan, and Schwarcz (2023), Cui et al. (2024), Dell’Acqua et al. (2024), Dell’Acqua et al. (2025), Doshi and Hauser (2024), Edelman, Ngwe, and Peng (2023), Fitzpatrick et al. (2025), Freeman et al. (2024), Fu et al. (2024), Gambacorta et al. (2024), Haslberger, Gingrich, and Bhatia (2024), Hoffmann et al. (2024), Kaisen, Li, and Lu (2024), Kreitmeir and Raschky (2024), Merali (2024), Miroyan et al. (2025), Mozannar et al. (2024), Ni et al. (2024), Noy and Zhang (2023), Otis et al. (2024), Peng et al. (2023), Quispe and Grijalba (2024), Spatharioti et al. (2025), W. Wang et al. (2024), R. E. Wang et al. (2025), Weber et al. (2024), Wiles et al. (2024), Yeverechyahu, Mayya, and Oestreicher-Singer (2024), Yu and Qi (2024).

Footnotes

1. The Boolean search query used in Scopus was “TITLE-ABS-KEY (“Gen* AI”) AND TITLE-ABS-KEY (productivity)”, where the asterisk functions as a wildcard to capture variations such as “gen” and “generative”, or other synonyms beginning with “gen”.

2. Extracting estimates from papers is not always straightforward, as some papers provide figures rather than tables and, in the case of interaction terms, it is unclear how to code the estimated effect because it depends on the values of other variables. We have an Excel spreadsheet that explains for each paper what we included and did not include. In general, we do not code data from figures unless no other estimates are available for that study and the figures are sufficiently detailed to get reasonable guesses of the values.

3. Two studies provide the mean outcome rather than the mean for the base category. Since those studies use the mean outcome to compute percentage changes, we do so too for those 2 studies. To compute the standard error of the percentage change, we multiply the percentage change by the ratio of the estimated standard error and the estimated effect size. This underestimates the true standard error (which would take into account the standard error of the base category mean). However, most studies do not report that standard error, and studies that do refer to percentage changes typically implicitly assume that there is no such variation when discussing results. Our approach is thus consistent with what the typical study reports.

4. The I2 statistic, which measures the importance of the random effects, equals 99.951, suggesting that almost all of the variance comes from the variance of the random effect (\(\tau^2\)) rather than from sampling variance.

5. If we winsorize the estimates at the 1st and 99th percentiles, we get very similar results.